Introduction

Microsoft Fabric is Microsoft's recently launched analytics platform, delivered as software as a service (SaaS). Fabric unifies Data Factory, Synapse Data Engineering, Synapse Data Science, Synapse Real-Time Analytics, Power BI and Data Activator in a single interface. All data is stored in Delta format in OneLake, a single unified storage layer.

You can build ETL/ELT pipelines in Fabric much as you would in Azure Data Factory: both platforms provide services for designing, scheduling and managing data workflows. Moving from Azure Data Factory to Fabric, however, calls for a seamless migration plan. In this blog you will see how to migrate existing Azure Data Factory pipelines to Fabric for your data integration needs.

How would migrating from Azure Data Factory to Fabric benefit you?

Here are some reasons to consider migrating to Fabric:

OneLake: OneLake is the storage layer for Fabric. Its main benefit is that once data is loaded into it, it integrates easily with other services such as Synapse and Power BI, so there is no need to load the data again for each service. Other benefits of OneLake include:

- All compute engines automatically store their data in OneLake.

- Data is stored in a single common format (Delta Parquet).

- A single security layer covers the data of all compute engines.

- It follows a “one copy” principle: data is not duplicated across engines.
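To make the “one copy” idea concrete, here is a minimal sketch of how a Lakehouse table in OneLake is addressed. It assumes OneLake's ADLS Gen2-compatible `abfss://` addressing on the `onelake.dfs.fabric.microsoft.com` endpoint; the helper `onelake_table_uri` and the workspace, lakehouse and table names are hypothetical. Every engine that reads this one URI sees the same Delta files.

```python
# Sketch only: URI layout assumes OneLake's ADLS Gen2-compatible addressing.
# The helper name and all workspace/lakehouse/table names are hypothetical.
ONELAKE_HOST = "onelake.dfs.fabric.microsoft.com"


def onelake_table_uri(workspace: str, lakehouse: str, table: str) -> str:
    """Build the abfss:// URI of a Lakehouse table stored in OneLake.

    Every engine that reads this URI sees the same Delta files, so no
    engine needs its own copy of the data.
    """
    for segment in (workspace, lakehouse, table):
        # Reject empty segments and embedded slashes, which would change the path
        if not segment or "/" in segment:
            raise ValueError(f"invalid path segment: {segment!r}")
    return f"abfss://{workspace}@{ONELAKE_HOST}/{lakehouse}.Lakehouse/Tables/{table}"


print(onelake_table_uri("SalesWorkspace", "SalesLakehouse", "orders"))
```

In a Fabric Spark notebook you could then read the table with `spark.read.format("delta").load(uri)`, and other engines would point at the same location rather than at a copy.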

Datasets: You don't need to create datasets in Fabric. Instead, you connect directly to the data source to load the data.
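Because ADF activities point at separate dataset definitions, it can help to list which datasets a pipeline depends on before migrating, as a checklist of the source and sink connections each activity will need in Fabric. The sketch below assumes you have the pipeline's JSON (for example from the ADF code view or a Git-integrated repository); `find_dataset_refs` and the sample names are hypothetical. It walks the whole document, so datasets referenced inside nested activities or in a Lookup's `typeProperties.dataset` are caught too.

```python
import json
from typing import Any, Optional, Set


def find_dataset_refs(node: Any, found: Optional[Set[str]] = None) -> Set[str]:
    """Recursively collect the name of every DatasetReference in ADF pipeline JSON."""
    if found is None:
        found = set()
    if isinstance(node, dict):
        # ADF references a dataset as {"referenceName": ..., "type": "DatasetReference"}
        if node.get("type") == "DatasetReference" and "referenceName" in node:
            found.add(node["referenceName"])
        for value in node.values():
            find_dataset_refs(value, found)
    elif isinstance(node, list):
        for item in node:
            find_dataset_refs(item, found)
    return found


# Hypothetical exported pipeline (all names are illustrative)
pipeline = json.loads("""
{
  "name": "CopySales",
  "properties": {
    "activities": [
      {"name": "LookupConfig", "type": "Lookup",
       "typeProperties": {"dataset": {"referenceName": "ConfigTable", "type": "DatasetReference"}}},
      {"name": "CopyOrders", "type": "Copy",
       "inputs": [{"referenceName": "OrdersCsv", "type": "DatasetReference"}],
       "outputs": [{"referenceName": "OrdersSql", "type": "DatasetReference"}]}
    ]
  }
}
""")
print(sorted(find_dataset_refs(pipeline)))  # ['ConfigTable', 'OrdersCsv', 'OrdersSql']
```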

Azure integration runtime: Fabric does not use the Azure integration runtime (the AutoResolveIntegrationRuntime); compute for data movement is managed by Fabric itself.
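Linked services that rely on the default Azure integration runtime need no runtime setup in Fabric, but those routed through a self-hosted integration runtime deserve a closer look, since Fabric typically reaches on-premises sources through an on-premises data gateway instead. This sketch assumes exported ADF linked service and integration runtime JSON; `services_on_self_hosted_ir` and the sample names are hypothetical.

```python
from typing import List


def services_on_self_hosted_ir(linked_services: List[dict], runtimes: List[dict]) -> List[str]:
    """Return linked services whose connectVia points at a self-hosted integration runtime.

    Linked services without a connectVia block use ADF's default
    AutoResolveIntegrationRuntime and are therefore not reported.
    """
    # ADF integration runtimes declare their kind as "Managed" or "SelfHosted"
    self_hosted = {
        rt["name"]
        for rt in runtimes
        if rt.get("properties", {}).get("type") == "SelfHosted"
    }
    flagged = []
    for ls in linked_services:
        via = ls.get("properties", {}).get("connectVia") or {}
        if via.get("referenceName") in self_hosted:
            flagged.append(ls["name"])
    return sorted(flagged)


# Hypothetical exported definitions (all names are illustrative)
runtimes = [
    {"name": "OnPremIR", "properties": {"type": "SelfHosted"}},
    {"name": "ManagedIR", "properties": {"type": "Managed"}},
]
linked_services = [
    {"name": "BlobStore", "properties": {"type": "AzureBlobStorage"}},
    {"name": "OnPremSql", "properties": {
        "type": "SqlServer",
        "connectVia": {"referenceName": "OnPremIR", "type": "IntegrationRuntimeReference"},
    }},
]
print(services_on_self_hosted_ir(linked_services, runtimes))  # ['OnPremSql']
```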

Pipelines: Data pipelines in Fabric integrate easily with other Fabric items such as Lakehouse and Data Warehouse.

Activities: Data Factory in Fabric supports more activities than Azure Data Factory, including new additions such as the Office 365 activity.
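Even with a broader activity set, it is worth inventorying the activity types a pipeline actually uses and checking each one against Fabric's activity list. Here is a minimal sketch, assuming ADF pipeline JSON in which container activities keep children under `activities` (ForEach, Until), `ifTrueActivities`/`ifFalseActivities` (If Condition), and `cases`/`defaultActivities` (Switch). The helper names are hypothetical, and the `verified` set is simply whatever you have already checked, not a list of what Fabric supports.

```python
from collections import Counter
from typing import Iterable, List, Optional

# ADF container activities keep their children under these typeProperties keys
NESTED_KEYS = ("activities", "ifTrueActivities", "ifFalseActivities", "defaultActivities")


def activity_types(activities: Iterable[dict], counts: Optional[Counter] = None) -> Counter:
    """Count activity types in an ADF pipeline, including nested activities."""
    if counts is None:
        counts = Counter()
    for act in activities:
        counts[act["type"]] += 1
        props = act.get("typeProperties", {})
        for key in NESTED_KEYS:
            activity_types(props.get(key, []), counts)
        # Switch activities hold one activity list per case
        for case in props.get("cases", []):
            activity_types(case.get("activities", []), counts)
    return counts


def not_yet_verified(counts: Counter, verified: Iterable[str]) -> List[str]:
    """Activity types you still need to check against Fabric's activity list."""
    return sorted(set(counts) - set(verified))


# Hypothetical pipeline activities (names are illustrative)
activities = [
    {"name": "GetFiles", "type": "GetMetadata"},
    {"name": "EachFile", "type": "ForEach",
     "typeProperties": {"activities": [
         {"name": "CopyFile", "type": "Copy"},
         {"name": "IsLarge", "type": "IfCondition",
          "typeProperties": {"ifTrueActivities": [{"name": "Notify", "type": "WebActivity"}]}},
     ]}},
]
counts = activity_types(activities)
print(not_yet_verified(counts, verified={"Copy", "ForEach"}))  # ['GetMetadata', 'IfCondition', 'WebActivity']
```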

Publish: In Fabric Data Factory you don’t need to publish your pipeline. Instead, you use the Save button to preserve it, and clicking Run saves the pipeline automatically.

Monitoring: The monitoring hub in Fabric offers more advanced functionality, giving you better insight into your pipeline runs and a better overall experience.

Implementation:

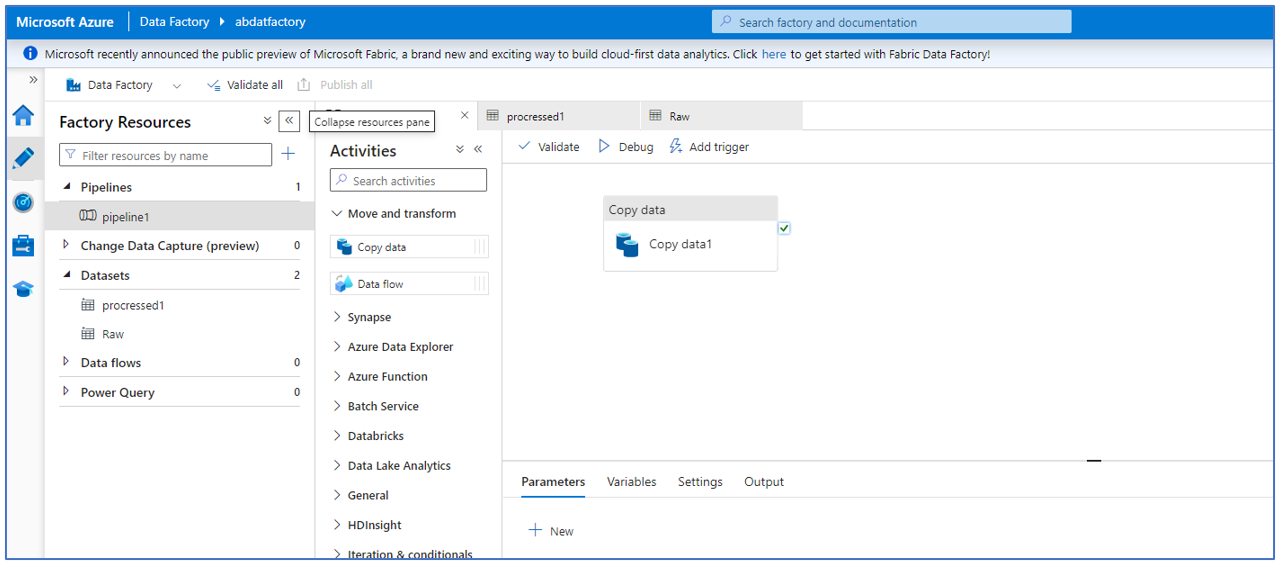

Step 1: In Azure Data Factory, identify the pipeline that you want to migrate to Fabric.
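If a factory holds many pipelines, listing them programmatically can help you decide which ones to migrate. This sketch assumes the `azure-identity` and `azure-mgmt-datafactory` Python packages and uses `DataFactoryManagementClient.pipelines.list_by_factory`; the helper names, subscription ID, resource group and factory name are hypothetical placeholders.

```python
from typing import Any, List


def make_client(subscription_id: str) -> Any:
    """Create an ADF management client (requires azure-identity and azure-mgmt-datafactory)."""
    # Imported lazily so pipeline_names() can be exercised without the Azure SDK installed
    from azure.identity import DefaultAzureCredential
    from azure.mgmt.datafactory import DataFactoryManagementClient

    return DataFactoryManagementClient(DefaultAzureCredential(), subscription_id)


def pipeline_names(client: Any, resource_group: str, factory_name: str) -> List[str]:
    """Return the sorted names of every pipeline in one data factory."""
    # list_by_factory returns a paged iterable; iterating it follows all pages
    pages = client.pipelines.list_by_factory(resource_group, factory_name)
    return sorted(p.name for p in pages)
```

For example, `pipeline_names(make_client("<subscription-id>"), "my-resource-group", "my-data-factory")` would return the pipeline names you then look for in Fabric.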

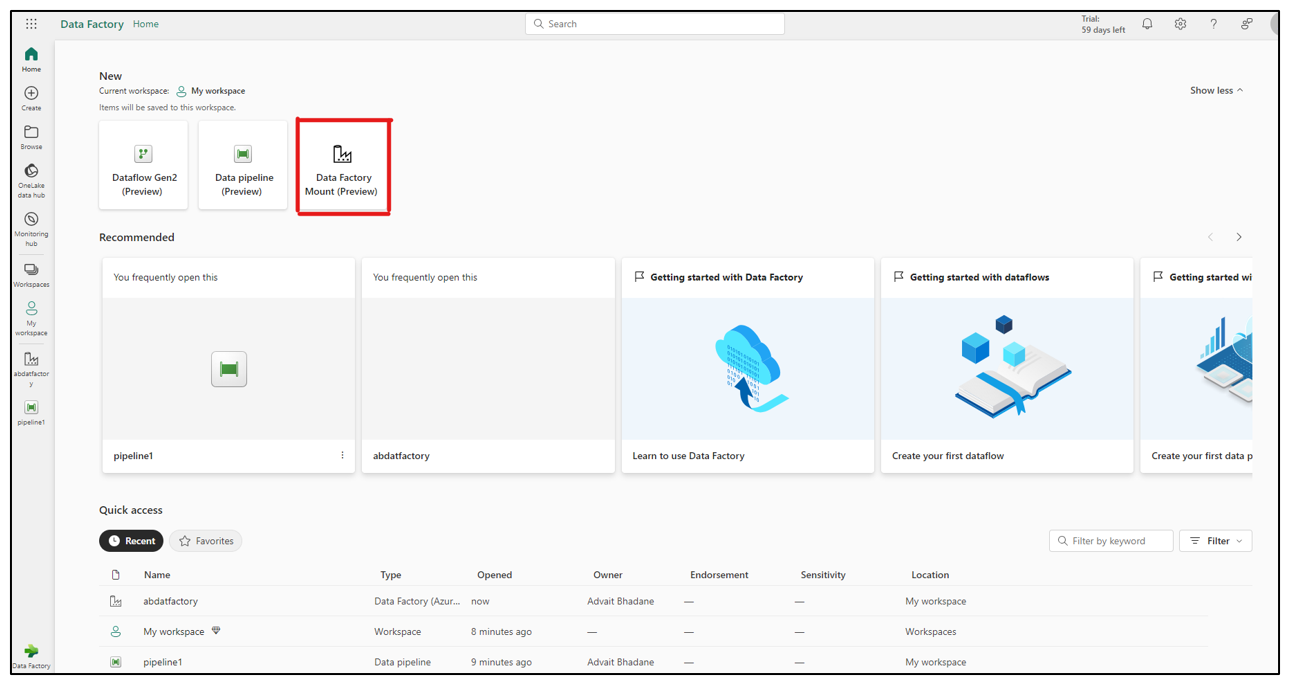

Step 2: Navigate to the “Data Factory” section in Fabric and click the “Data Factory Mount” option to mount your existing data factory.

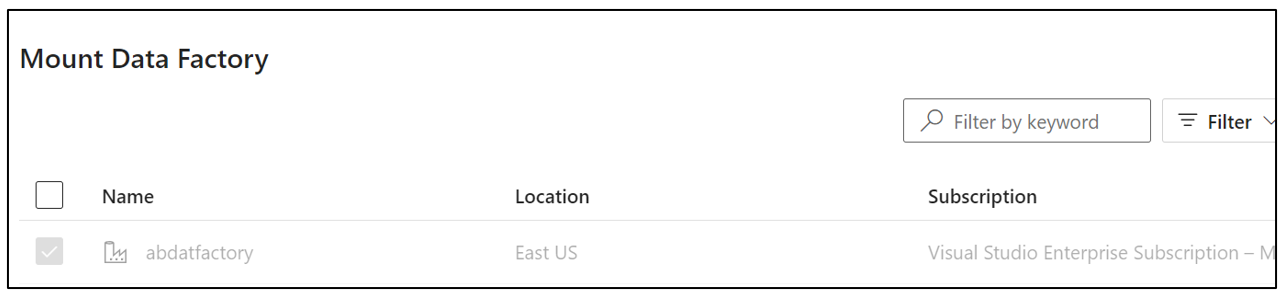

Step 3: A list of all your Azure Data Factory pipelines appears. Select the pipeline you want to migrate.

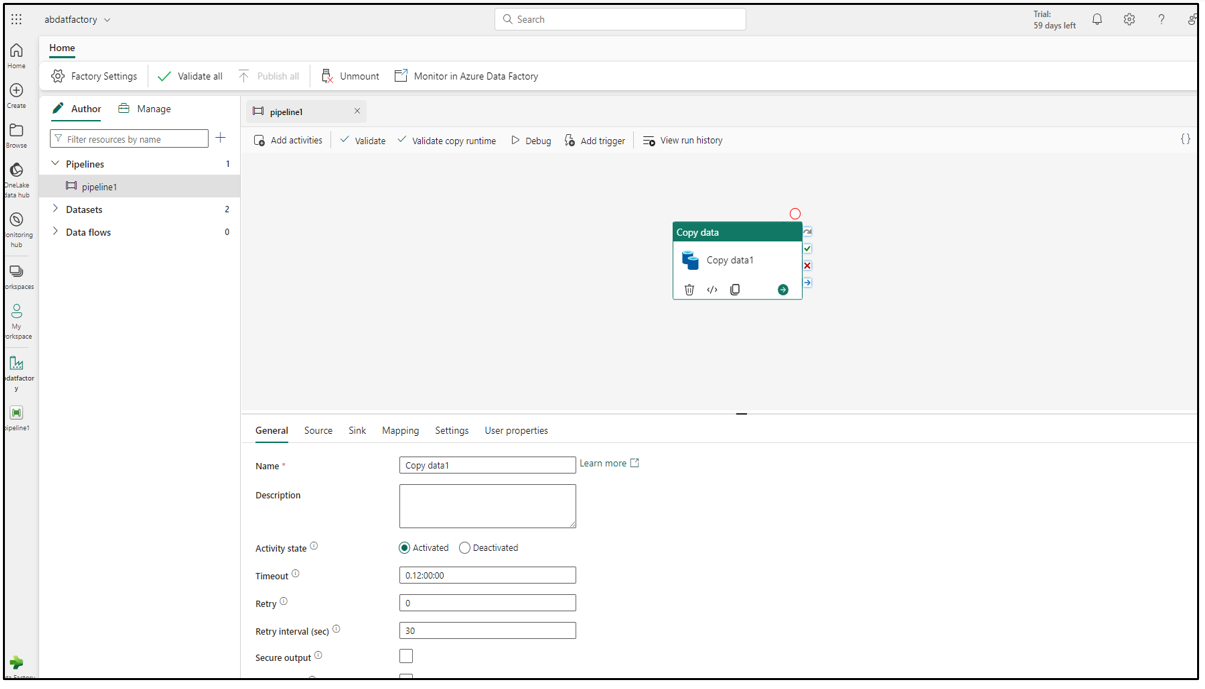

Step 4: Open your Fabric workspace, where the migrated pipeline now appears.
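To confirm the result without the portal, you could query the workspace through the Fabric REST API's List Items endpoint (`GET https://api.fabric.microsoft.com/v1/workspaces/{workspaceId}/items`). This sketch assumes you already have a Microsoft Entra access token for that API (for example from `azure-identity` with the `https://api.fabric.microsoft.com/.default` scope) and reads only the first page, ignoring any continuation token. The helper names and sample payload are hypothetical, and this post does not establish which item type a mounted factory reports, so inspect the `type` field in your own response.

```python
import json
import urllib.request
from typing import List, Optional

FABRIC_API = "https://api.fabric.microsoft.com/v1"


def list_workspace_items(workspace_id: str, token: str) -> dict:
    """Call Fabric's List Items endpoint for one workspace (first page only)."""
    request = urllib.request.Request(
        f"{FABRIC_API}/workspaces/{workspace_id}/items",
        headers={"Authorization": f"Bearer {token}"},
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def find_items(payload: dict, display_name: str, item_type: Optional[str] = None) -> List[dict]:
    """Filter a List Items payload by display name and, optionally, by item type."""
    return [
        item
        for item in payload.get("value", [])
        if item.get("displayName") == display_name
        and (item_type is None or item.get("type") == item_type)
    ]


# Hypothetical payload shaped like a List Items response (values are illustrative)
payload = {"value": [
    {"id": "1111", "displayName": "CopySales", "type": "DataPipeline"},
    {"id": "2222", "displayName": "SalesLakehouse", "type": "Lakehouse"},
]}
print(find_items(payload, "CopySales"))
```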

Final Thoughts:

Azure Data Factory pipelines can be migrated to Fabric seamlessly using Fabric's mount feature. Because the mount connects to your existing data factory in its Azure subscription, the transition is smooth and you can move your current pipelines to Fabric with little effort. Embrace the power of Fabric for a unified and efficient approach to data integration and management.