Azure Databricks is a core component of the Modern Data Warehouse architecture. Built on the mighty Apache Spark platform, its features and capabilities can be adapted to a wide range of powerful tasks. In this blog, we are going to see how to connect to Azure Key Vault from Azure Databricks.

To get started, we need an Azure Databricks service running in Azure with a cluster spun up, and an Azure Key Vault service created.

Secrets required in Azure Key Vault

In Azure Key Vault, we will add the secrets that we will call from the notebooks in Azure Databricks. First and foremost, this is for security: usernames and passwords are not hardcoded within the notebook cells, and access can be controlled and revoked later if needed (assuming the vault is managed by a different administrator). It also makes the solution easier to maintain: if a password ever needs to be changed, it is changed only in Azure Key Vault, without the need to go through any notebooks or logic.

In this case, we will be adding two secrets in Azure Key Vault, named:

- “HostTo-API”

- “KeyTo-API”

Setting secret scope in Azure Databricks

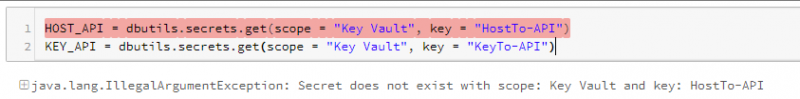

If, at this point, we try to call dbutils.secrets.get() to retrieve a secret from Key Vault, we would get an error.

This is because a secret scope needs to be created that will identify a group of secret keys by a collection name.
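To check from a notebook whether a scope is already visible before calling dbutils.secrets.get(), something like the following could be used. This is only a minimal sketch: `scope_exists` is a hypothetical helper name, `dbutils` is passed in explicitly so the function can also be exercised outside a notebook, and it assumes that `dbutils.secrets.listScopes()` returns entries exposing a `name` attribute.

```python
def scope_exists(dbutils, scope_name):
    """Return True if a secret scope with this exact name is visible to the caller."""
    # listScopes() returns one entry per scope; each entry exposes its name as .name
    return any(s.name == scope_name for s in dbutils.secrets.listScopes())
```

In a notebook, this would be called as `scope_exists(dbutils, "<scope name>")`, where the scope name is whatever was chosen when the scope was created.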

To create a secret scope, the user should have Owner permissions on the Azure Key Vault service that is to be linked to it.

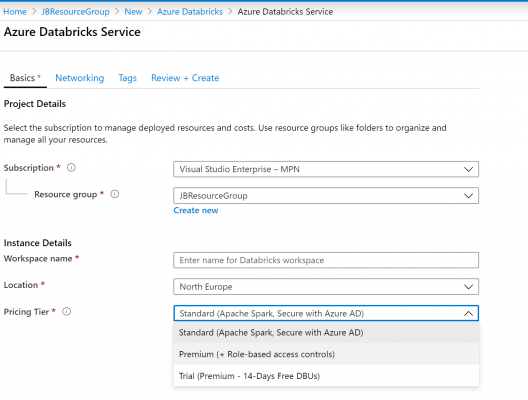

The Azure Databricks service should also have been set up with the Premium Pricing Tier; otherwise, the following verification error is shown when trying to create a secret scope:

Premium Tier is disabled in this workspace. Secret scopes can only be created with initial_manage_principal "users".

Hence, when creating the service, the Pricing Tier needs to be changed from the default Standard to Premium:

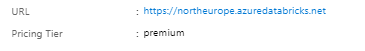

If these are in place, copy the URL of the Databricks instance from the initial service screen:

Then append the following to it and load it through the browser:

/#secrets/createScope

In this case, the full URL is:

https://northeurope.azuredatabricks.net/#secrets/createScope

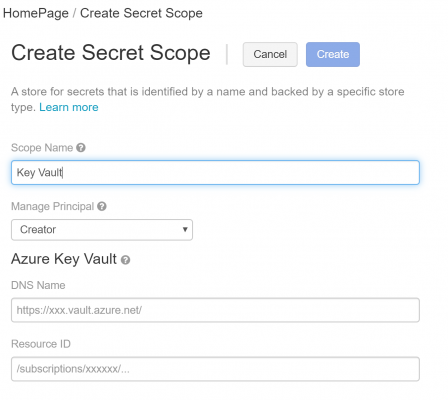
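The URL construction above can be sketched as a small helper. `create_scope_url` is a hypothetical name used only for illustration; the workspace URL is the one used in this post.

```python
def create_scope_url(workspace_url):
    """Append the secret-scope creation fragment to a workspace URL."""
    # Drop any trailing slash so the result has exactly one '/' before '#'
    return workspace_url.rstrip("/") + "/#secrets/createScope"


print(create_scope_url("https://northeurope.azuredatabricks.net/"))
# https://northeurope.azuredatabricks.net/#secrets/createScope
```

Note that the fragment is case sensitive, so `createScope` should be typed exactly as shown.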

Loading this URL leads to the following page:

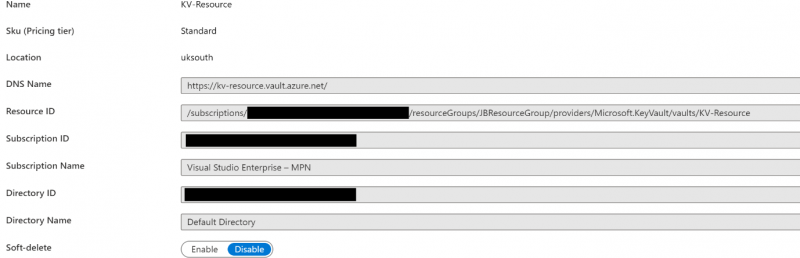

After entering the Scope Name (in this case I will be using “Key Vault”), copy the DNS Name and Resource ID from the Properties screen of the Azure Key Vault service into the relevant fields above.
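As a sanity check on what is pasted into those fields, the two values usually follow a predictable shape. The sketch below assumes the Azure public cloud (the `vault.azure.net` suffix) and the standard resource ID layout; `key_vault_scope_fields` is a hypothetical helper, and the subscription ID, resource group and vault name are placeholders.

```python
def key_vault_scope_fields(subscription_id, resource_group, vault_name):
    """Build the expected DNS Name and Resource ID values for a Key Vault."""
    return {
        # Vault URI as shown under Properties (Azure public cloud suffix assumed)
        "dns_name": f"https://{vault_name}.vault.azure.net/",
        # Resource ID of the vault within its subscription and resource group
        "resource_id": (
            f"/subscriptions/{subscription_id}"
            f"/resourceGroups/{resource_group}"
            f"/providers/Microsoft.KeyVault/vaults/{vault_name}"
        ),
    }


fields = key_vault_scope_fields(
    "00000000-0000-0000-0000-000000000000",  # placeholder subscription ID
    "rg-example",                            # placeholder resource group
    "kv-resource",                           # placeholder vault name
)
print(fields["dns_name"])
# https://kv-resource.vault.azure.net/
```

The values shown on the Properties screen remain the source of truth; the helper is only useful for spotting a truncated or mistyped value.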

BUT! Hitting Create might lead you to another verification error:

Internal error happened while granting read/list permission to Databricks service principal to KeyVault: https://kv-resource.vault.azure.net/

This would need to be solved through the next step.

Creating Service Principals

As with other resources in Azure, a service principal is required to allow access between the different resources when they are secured by an Azure AD tenant. A security principal defines the access policy and permissions used to authenticate and authorise either a user (through a user principal) or an application (through a service principal) in that Azure AD tenant.

Such errors can be common when working on existing client tenancies during projects, and they need to be handled by the Administrator.

Some of the potential options one can apply to solve this are:

- Having the user who is signed in to Azure Databricks elevated to allow the creation of service principals. This is the less advisable option, since that user would then hold more rights than this exercise requires.

- Having the Secret Scope in Azure Databricks created by someone who already has such rights in place (such as the Administrator).

Once this is done, you can proceed to create the secret scope as explained in the previous step. The secrets can then be retrieved within Databricks and passed as parameters, as in the example below (here using PySpark):

```python
import requests

# Get keys from Azure Key Vault (each key must match a secret name in the vault).
# dbutils is provided by the Databricks notebook environment.
ENCODED_AUTH_KEY = dbutils.secrets.get(scope="Key Vault", key="EncodedAuthKey-RestAPI")
API_URL = dbutils.secrets.get(scope="Key Vault", key="ApiUrl-RestAPI")

# Get data from the REST API using the secrets retrieved from Key Vault
APIResponse = requests.get(
    API_URL,
    headers={"Authorization": ENCODED_AUTH_KEY},
)
```
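Before relying on these lookups, it can help to confirm that the scope actually exposes the secret names the notebook expects. The following is a minimal sketch, not part of the original setup: `missing_secrets` is a hypothetical helper, `dbutils` is passed in explicitly so the function can be tested outside a notebook, and it assumes `dbutils.secrets.list(scope)` returns entries exposing the secret name as `key`.

```python
def missing_secrets(dbutils, scope, required_keys):
    """Return the required secret names that the scope does not expose."""
    # secrets.list() returns metadata entries; each exposes the secret name as .key
    available = {s.key for s in dbutils.secrets.list(scope)}
    return [k for k in required_keys if k not in available]
```

In a notebook, `missing_secrets(dbutils, "Key Vault", ["EncodedAuthKey-RestAPI", "ApiUrl-RestAPI"])` would return an empty list when both secrets are visible through the scope.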