This blog was written by Shilpa Jathar on 25th April 2024

What is DALL·E?

DALL·E is a text-to-image generation system developed by OpenAI using deep learning. The original DALL·E is a 12-billion parameter version of GPT-3, trained to generate images from text descriptions (prompts) using a dataset of text and image pairs. A prompt is the sequence of words that describes the image you want DALL·E to generate.

DALL·E History

DALL·E – Introduced on 5th January 2021.

DALL·E 2 – Announced in April 2022.

DALL·E 3 – Released in October 2023.

DALL·E 2

DALL·E 2 generates more realistic and accurate images with 4x greater resolution than DALL·E. It can create original, realistic images and art from a text description, combining concepts, attributes and styles while taking shadows, reflections and textures into account. It can also take an image and create different variations of it.

DALL·E 3

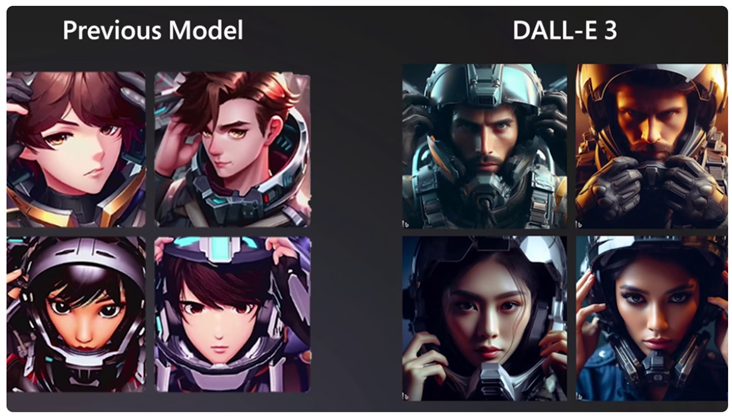

DALL·E 3 translates your ideas into images that closely adhere to the text you provide. DALL·E 3 delivers significant improvements over DALL·E 2, even when using the same prompt.

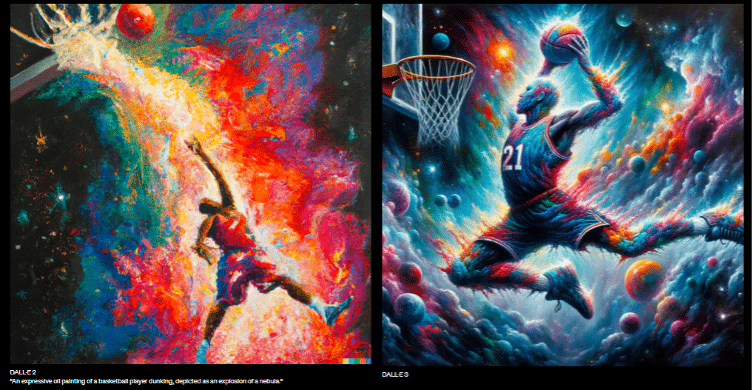

DALL·E 2 Vs DALL·E 3

Prompt: An expressive oil painting of a basketball player dunking, depicted as an explosion of nebula.

DALL·E in ChatGPT

When prompted with an idea, ChatGPT will automatically generate detailed prompts for DALL·E 3 that bring your idea to life. You can ask ChatGPT to make changes with just a few different words. DALL·E 3 is available to ChatGPT Plus and Enterprise customers.

DALL·E in Paint Cocreator

Microsoft Windows 11 has a new feature called Paint Cocreator that lets you collaborate with a powerful AI model – DALL·E.

DALL·E can generate diverse and realistic images from any text description you enter. Whether you want to draw an animal, bird or anything else, Cocreator will help you make your own artwork with the help of AI. To use Cocreator, open Microsoft Paint, select the Cocreator icon on the toolbar and enter a description of the image in the text box.

Current Limitations

Cocreator is currently only available in the following regions – United States, France, UK, Australia, Canada, Italy, and Germany. Only the English language is supported for this feature.

DALL·E in Bing Copilot Designer

Designer is a free AI-powered tool that generates unique, customisable images from prompts. It generates four images for a given prompt. It can be used to design birthday cards, artwork, interior design ideas, creative presentations and much more. DALL·E 3 and Designer offer better image quality and a user-friendly interface.

Powered by DALL·E 3, Bing Copilot Designer uses AI to generate images from words. If you would like to create your own images using Bing Copilot Designer, you can do so at https://www.bing.com/create

To create an image using Bing Copilot Designer, go to the above URL and enter prompts to generate an image.

Examples

Prompt: A laptop with a drawing of a human brain inside with the caption ‘AI’ on it.

Prompt: A woman has a laptop and books around her in an office.

DALL·E 3 in ChatGPT vs DALL·E 3 in Bing Copilot Designer

While both ChatGPT and Bing Copilot Designer offer AI-powered image generation, ChatGPT restricts this feature to its Plus and Enterprise subscribers. DALL·E 3 in ChatGPT is integrated with the chat service, allowing users to refine prompts through conversation with the AI, which can lead to more tailored image results. Each platform has its own strengths and may be better suited to different types of image generation tasks.

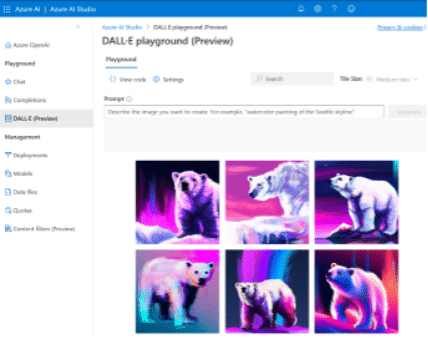

DALL·E in Azure

To use DALL·E in Azure, an Azure OpenAI resource must first be created in a supported region, for example Sweden Central. The subscription must be granted access to DALL·E, and a DALL·E model must then be deployed with your Azure resource.

To generate an image, browse to Azure OpenAI Studio and sign in with your Azure subscription. Enter your image prompt into the text box and select Generate. When the AI-generated image is ready, it appears on the page.
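The same generation step can also be driven from code rather than the Studio page. The sketch below only builds the HTTP request and does not send it. The endpoint shape, the `api-key` header and the `api-version` value are assumptions based on the Azure OpenAI REST documentation linked in the references, and the resource and deployment names are hypothetical placeholders, so check them against your own Azure resource before use.

```python
import json
from urllib.parse import quote


def build_image_request(resource, deployment, api_key, prompt,
                        size="1024x1024", api_version="2024-02-01"):
    """Return (url, headers, body) for an image generation call.

    Assumption: the URL layout and api-version follow the Azure OpenAI
    REST reference; verify both for your own resource.
    """
    url = (
        f"https://{quote(resource)}.openai.azure.com/openai/deployments/"
        f"{quote(deployment)}/images/generations?api-version={api_version}"
    )
    headers = {"api-key": api_key, "Content-Type": "application/json"}
    body = json.dumps({"prompt": prompt, "n": 1, "size": size})
    return url, headers, body


url, headers, body = build_image_request(
    resource="my-resource",      # hypothetical Azure OpenAI resource name
    deployment="my-dalle3",      # hypothetical DALL·E deployment name
    api_key="<your-key>",        # placeholder, never hard-code a real key
    prompt="A watercolour painting of a lighthouse at sunrise",
)
print(url)
print(body)
```

Keeping request construction separate from sending makes it easy to inspect the prompt and size before spending any quota.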

How to use DALL·E?

Using DALL·E effectively is a skill. Here are a few tips on how to use DALL·E:

- Use seed numbers in your character generation. This helps keep the characters you generate consistent.

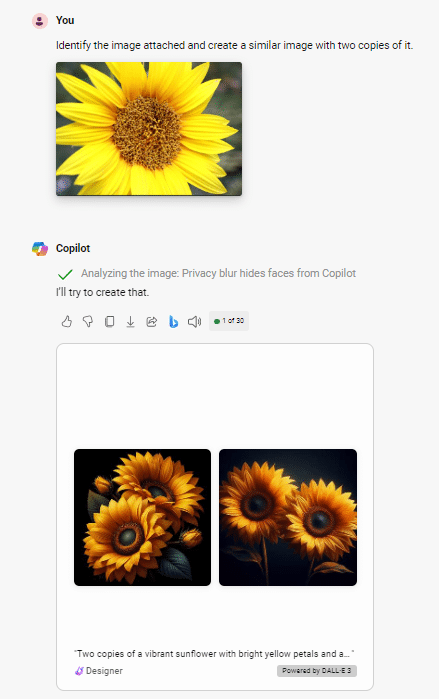

- In ChatGPT as well as in Copilot Designer, you can upload existing images, optionally resize them, and then place them within the canvas to bring additional imagery into the scene. To generate similar pictures, upload a picture, ask DALL·E to describe its features and then ask it to generate similar pictures. This can be achieved with the following steps:

- Open ChatGPT DALL·E 3 or Copilot Designer services.

- Use the attachment/image icon to upload your image.

- After uploading, provide a prompt to guide AI to generate similar images.

- It will create images based on your uploaded picture and the prompt.

For example,

Prompt: Identify the image attached and create a similar image with two copies of it.

Prompt generated: Two copies of a vibrant sunflower with bright yellow petals and a dark background.

- Use descriptive words. For example, instead of “forest”, write “ancient mystery forest”.

- Specify lighting – day or night, sunny or cloudy.

- Specify perspective – closer look, bird’s eye view, side view.

- Focus on every element of a prompt.

- Avoid overloading the prompt with information. Keep it balanced.
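The prompt-writing tips above (descriptive words, lighting, perspective) can be sketched as a small helper that assembles a prompt from its elements. This is only an illustration: `build_prompt` and its parameters are hypothetical names and are not part of any DALL·E API.

```python
def build_prompt(subject, descriptors=(), lighting=None, perspective=None):
    """Combine prompt elements into one comma-separated description.

    Hypothetical helper for illustration; DALL·E itself just receives
    the final string.
    """
    # Descriptive words go directly in front of the subject.
    parts = [" ".join([*descriptors, subject])]
    if lighting:
        parts.append(lighting)
    if perspective:
        parts.append(perspective)
    return ", ".join(parts)


prompt = build_prompt(
    "forest",
    descriptors=("ancient", "mystery"),
    lighting="on a cloudy night",
    perspective="bird's eye view",
)
print(prompt)
```

Treating each element as a separate argument makes it easier to check that every part of the prompt is intentional and that the prompt stays balanced.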

How does DALL·E work?

It decomposes the problem of image generation into small, discrete steps.

Dataset Recaptioning

Text-to-image models are trained on a dataset composed of a large quantity of pairings (t, i) where i is an image and t is text that describes that image. In large-scale datasets, t is generally derived from human authors who focus on simple descriptions of the image subject and omit background details, like a stop sign along a sidewalk. These shortcomings can be addressed using synthetically generated captions.

Building an Image Captioner

A tokenizer is used to break strings of text into discrete tokens. Once decomposed in this way, the text portion can be represented as a sequence – t = [t1, t2, . . ., tn]. We can then build a language model over the text.
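To make the idea of tokenising text and building a language model over it concrete, here is a minimal sketch. It is not the method used for DALL·E 3: the word-level tokenizer and the bigram model with add-one smoothing are simplified stand-ins for a subword tokenizer and a neural network, and all function names are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Toy tokenizer: lowercase words and single punctuation marks."""
    return re.findall(r"[a-z0-9]+|[^\sa-z0-9]", text.lower())


def train_bigram(captions):
    """Count how often each token follows the previous one."""
    bigrams, contexts, vocab = Counter(), Counter(), set()
    for caption in captions:
        tokens = ["<s>"] + tokenize(caption)  # <s> marks the start
        vocab.update(tokens)
        for prev, cur in zip(tokens, tokens[1:]):
            bigrams[(prev, cur)] += 1
            contexts[prev] += 1
    return bigrams, contexts, vocab


def log_likelihood(text, model):
    """Sum of log P(t_j | t_{j-1}) using add-one smoothing."""
    bigrams, contexts, vocab = model
    tokens = ["<s>"] + tokenize(text)
    total = 0.0
    for prev, cur in zip(tokens, tokens[1:]):
        prob = (bigrams[(prev, cur)] + 1) / (contexts[prev] + len(vocab))
        total += math.log(prob)
    return total


model = train_bigram(["a dog on a beach", "a cat on a sofa"])
tokens = tokenize("A dog on a sofa.")
natural = log_likelihood("a dog on a sofa", model)
scrambled = log_likelihood("sofa a on dog a", model)
print(tokens)
print(natural > scrambled)
```

The model assigns a higher likelihood to word orders that resemble its training captions, which is the property a captioner relies on when it generates text one token at a time.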

The language model is trained to maximise the likelihood of each token given the tokens before it, under the model’s parameters. To turn this language model into a captioner, you need only condition it on the image. Because an image is made up of many thousands of pixel values, a compressed representation space is needed. As described in the DALL·E 3 paper (https://cdn.openai.com/papers/dall-e-3.pdf), OpenAI follows the methods of Yu et al. 2022a (data augmentation and transfer learning) and jointly pre-trains its captioner with a CLIP (Contrastive Language–Image Pre-training) and a language modelling objective, using the above formulation on its dataset (t, i) of text and image pairs.

Data augmentation is a technique for artificially generating new data from existing data, primarily to train machine learning models. New samples are created through transformations such as rotation, scaling and flipping, which improves a model’s generalisability and robustness.
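As a small illustration of the transformations named above, the sketch below flips, rotates and scales a tiny image stored as a nested list of pixel values. It is a toy example with hypothetical function names; real pipelines typically use an image library and work on full-size images.

```python
def flip_horizontal(image):
    """Mirror each row left to right."""
    return [row[::-1] for row in image]


def rotate_90_clockwise(image):
    """Rotate the pixel grid 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def scale_up(image, factor):
    """Nearest-neighbour upscaling: repeat each pixel in both directions."""
    return [
        [pixel for pixel in row for _ in range(factor)]
        for row in image
        for _ in range(factor)
    ]


image = [[1, 2],
         [3, 4]]

# One original image yields several new training samples.
augmented = [
    flip_horizontal(image),
    rotate_90_clockwise(image),
    scale_up(image, 2),
]
for sample in augmented:
    print(sample)
```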

Transfer learning is a technique where a pre-trained model, often trained on a large-scale dataset, is fine-tuned for a different but related task or dataset to improve generalisability.

Fine-tuning the captioner

OpenAI first builds a small dataset of captions that describe only the main subject of the image, and continues to train its captioner on this dataset. Captions produced by the resulting captioner are called “short synthetic captions”. OpenAI then repeats this process a second time, creating a dataset of long, highly descriptive captions describing the contents of each image in the fine-tuning dataset. These captions describe not only the main subject of the image, but also its surroundings, background, text found in the image, styles, colouration, etc. The base captioner is then fine-tuned on this dataset.

Evaluating the re-captioned datasets

The re-captioned datasets are evaluated by computing the CLIP score, a reference-free metric that measures how well a generated caption for an image matches the actual content of the image. DALL·E 3 is trained to use synthetic captions.
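A CLIP score boils down to the cosine similarity between an image embedding and a caption embedding. The sketch below shows only that arithmetic, under stated assumptions: the three-dimensional vectors are made up for illustration (real embeddings come from CLIP’s image and text encoders), and the scale factor of 100 and the clipping at zero are assumed conventions rather than details confirmed by this blog.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def clip_score(image_embedding, text_embedding, scale=100.0):
    """Cosine similarity clipped at zero and rescaled (assumed convention)."""
    return scale * max(cosine_similarity(image_embedding, text_embedding), 0.0)


# Made-up embeddings, not the output of a real CLIP model.
image_vec = [0.9, 0.1, 0.3]
good_caption_vec = [0.8, 0.2, 0.4]   # points in a similar direction
bad_caption_vec = [-0.5, 0.9, 0.0]   # points in a different direction

good_score = clip_score(image_vec, good_caption_vec)
bad_score = clip_score(image_vec, bad_caption_vec)
print(good_score > bad_score)
```

Because the metric needs only the image and the candidate caption, no human-written reference caption is required, which is what “reference-free” means here.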

DALL·E Capabilities and Use Cases

DALL·E is useful to the following teams:

- Sales team

- Marketing team

- Design team

- Technical team

Design Team

DALL·E can be used to create impactful web pages so that clients’ websites look and feel professional and appeal to each website’s target audience.

Using DALL·E for web design can be a game-changer. It allows the creation of high-quality images, saving time and resources while delivering professional visuals.

Prompt: Create an image of a software office with sleek computers and a group of software engineers.

Marketing Team

DALL·E can be used to craft an event poster for a marketing campaign with a specific title. Simple games can also be created using DALL·E to engage clients at different conferences.

Prompt: Design an event poster for a marketing campaign and name it “New Era of Open AI”.

(Image type – watercolour)

Sales Team

The sales team can group clients into categories, for example airline businesses, educational projects, healthcare and more. A page describing each category can be created on the website for prospects, and used when talking to future leads or clients to explain the nature of the company’s work on different types of projects. The page can include interesting 3-D looking images that appeal to the client. DALL·E can be used as part of the web design process when creating these websites, which sales teams can then use to approach future clients.

Technical blog writing

Images generated using DALL·E can take your blog post to the next level. DALL·E can generate high-quality, unique images that you can use for your technical blogs, like the images used in this blog.

Limitations

Spatial awareness

While DALL·E 3 is a significant step forward for prompt following, it still struggles with object placement and spatial awareness. For example, using the words “to the left of”, “underneath” and “behind” can be unreliable.

Text rendering

When building the captioner, OpenAI paid special attention to ensuring that it was able to include prominent words found in images in the captions it generated. As a result, DALL·E 3 can generate text when prompted. During testing, it was noticed that this capability is unreliable, as words have missing or extra characters. When the model encounters text in a prompt, it sees tokens that represent whole words and must map those to letters in an image.

Specificity

Synthetic captions are prone to hallucinating important details about an image. Synthetic captions are made of synthetic text, that is, artificially generated text produced by algorithms that approximate the original data. This data can be used for the same purpose as the original.

Permissions/Copyrights

As per the details on the website – https://openai.com/dall-e-3, images you create with DALL·E 3 are yours to use and you don’t need OpenAI’s permission to reprint, sell or merchandise them.

Research

https://cdn.openai.com/papers/dall-e-3.pdf

Useful links/References

- https://openai.com/dall-e-3

- https://oai.azure.com/

- https://learn.microsoft.com/en-us/azure/ai-services/openai/dall-e-quickstart?tabs=dalle3%2Ccommand-line&pivots=programming-language-studio

- https://support.microsoft.com/en-us/windows/use-paint-cocreator-to-generate-ai-art-107a2b3a-62ea-41f5-a638-7bc6e6ea718f

- https://help.openai.com/en/articles/6516417-dall-e-editor-guide

Note: Images created in this blog post are just examples. They are created using Bing Copilot powered by DALL·E.