Logic Apps recently introduced the ability to connect to an Azure Data Factory V2 instance and perform a number of actions, including cancelling a pipeline, invoking a pipeline and interrogating the service for information about a particular pipeline run. This blog will focus on how to utilise the full potential of ADF V2 via a Logic App connector when calling a pipeline. In its current form the connector's designer gives you no way to provide input parameters to ADF from Logic Apps, thereby hamstringing one of the best features of ADF V2! Later in this blog I will show how to get around that. Despite this, there are a number of reasons why a Logic App calling an ADF V2 pipeline with a simple activity is a great thing, and I have gone into some detail below.

Event Driven Execution

The Azure Data Factory V2 team are now starting to bring in event driven triggers, but currently this is limited to the creation or modification of blobs in a blob storage account. This is a good start but pales in comparison to the overwhelming number of events that can trigger a Logic App, notably things like Service Bus, Event Grid, HTTP calls etc. The list goes on! More info on Logic App connectors here. By utilising Logic Apps as a wrapper for your ADF V2 pipelines you can open up a huge number of opportunities to diversify what triggers a pipeline run.

Simplifying Loops, Conditionals and Failure Paths

In addition to event driven triggers, the ADF team have also brought in an IF activity and a number of looping activities, which are really useful in a lot of scenarios. However, this kind of logic can be simplified when it's built in Logic Apps. The visual editor in Logic Apps makes the flow of a loop or an IF much easier to follow for those not so familiar with the business logic that’s been implemented. Additionally, failure paths can be handled much more efficiently and clearly, with many more options for logging or notifying of failure right out of the box, e.g. the Send Email activity.

Heavy Lifting of Data

Logic Apps, for all their benefits, are definitely not heavy lifters of data. They really excel at lightweight messaging and orchestration, whereas Data Factory is great at moving big chunks of data when using the Copy activity. When you pair these two together you get something that resembles SSIS Control Flow (Logic Apps) and SSIS Data Flow (Data Factory). Now that they can be closely and easily integrated, orchestrating the logical flow and movement of data in the cloud becomes much simpler.

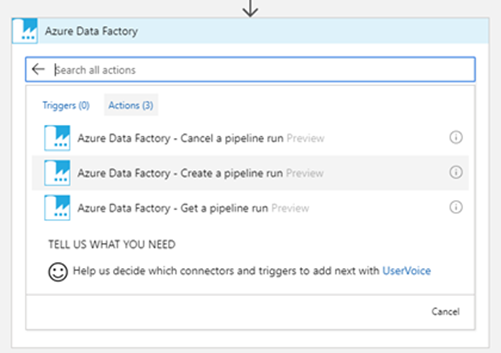

So now we know that pairing Logic Apps and Data Factory V2 is a great idea, let's look at how to do it. Understandably there are no triggers from Data Factory at this point, so you will need to trigger your Logic App in any one of the million ways that are available. Once you have your trigger sorted you can search for the Data Factory connector and choose the action you need. You will then need to connect to the Data Factory service as below by logging into your Azure tenant.

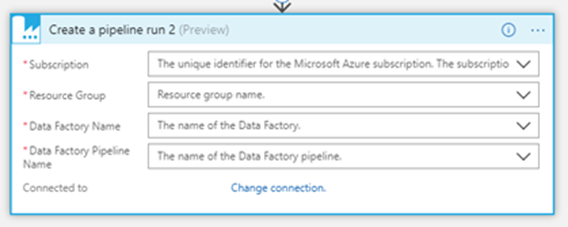

Once logged in you will need to supply a few details to locate the Data Factory instance and pipeline as below.

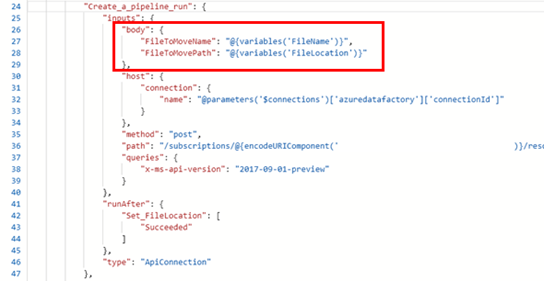

Now you have completed this you can trigger your Logic App and you will see that your Data Factory pipeline will be invoked as normal. However, by using only this method we are depriving ourselves of the ability to derive parameter values outside of Data Factory and pass them in at execution time. Currently there is nowhere in the designer to supply the input data! This is where the blog gets a bit hacky and we will venture into the Code View of the Logic App. [Side note: you can usually get around a lot of tricky situations by manipulating the code in Logic Apps and not just relying on what the UI gives you.] Click the “Code View” button in the designer and then locate your Data Factory V2 activity definition in the code page. It will look something like this (I have whited out my subscription id).

In order to pass in data to this Data Factory pipeline, all we need to do is add a “body” attribute within the “inputs” object like the below image. We can then reference any of the variables called out in the Logic App or any other value that might be available to us. In case you’re wondering, these input values need to be configured as input parameters to the Data Factory pipeline you will be calling. More on how to do that here.

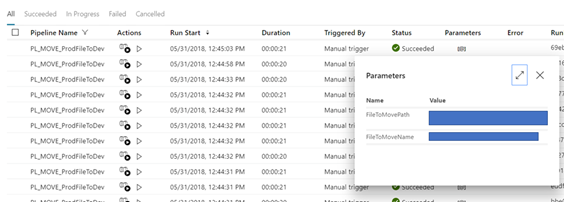
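For readers who cannot see the screenshot, here is a rough sketch of the same edit expressed in Python rather than by hand in Code View. It is not a copy of my actual definition: the shape of the action, the placeholder path segments, the api-version placeholder and the parameter names `sourceFolder` and `runDate` are all assumptions for illustration, so compare it against what your own Code View shows. The only part that matters is that a "body" object ends up inside "inputs".

```python
import copy
import json

# Hypothetical stand-in for the Data Factory action as it might appear in
# Code View. Every <placeholder> and the overall shape are assumptions;
# check them against your own Logic App definition.
action = {
    "type": "ApiConnection",
    "inputs": {
        "host": {
            "connection": {
                "name": "@parameters('$connections')['azuredatafactory']['connectionId']"
            }
        },
        "method": "post",
        "path": (
            "/subscriptions/<subscription-id>"
            "/resourcegroups/<resource-group>"
            "/providers/Microsoft.DataFactory/factories/<factory-name>"
            "/pipelines/<pipeline-name>/CreateRun"
        ),
        "queries": {"x-ms-api-version": "<api-version>"},
    },
    "runAfter": {},
}


def with_pipeline_parameters(action, parameters):
    """Return a copy of the action with `parameters` added as inputs.body."""
    updated = copy.deepcopy(action)  # leave the original definition untouched
    updated["inputs"]["body"] = dict(parameters)
    return updated


# Hypothetical parameter names. Each key must match an input parameter
# defined on the Data Factory pipeline being called. The values are Logic
# Apps expressions that are evaluated when the Logic App runs.
pipeline_parameters = {
    "sourceFolder": "@{triggerBody()?['folder']}",
    "runDate": "@{utcNow()}",
}

updated_action = with_pipeline_parameters(action, pipeline_parameters)

# Print the JSON so the "inputs" object can be compared with Code View.
print(json.dumps(updated_action, indent=4))
```

The printed JSON shows where the "body" attribute sits relative to "host", "method" and "path". In practice you would just type the "body" object straight into Code View; the script only makes the before and after explicit.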

Once you have updated your code accordingly, save and run your Logic App and you should be able to see the pipeline invoked with your inputs in the Data Factory service as below.

In conclusion, the marriage of Logic Apps and Data Factory is a happy and harmonious one with many benefits to be exploited. Now armed with the key to utilise input parameters for pipelines called from Logic Apps, you can cater for a vast number of data integration scenarios that require complex but clear logic and heavy lifting of data. Any questions, get in touch or catch me on Twitter @MattTheHow.