I recently passed the Databricks Certified Data Engineer Professional exam, and this blog post covers my top tips for passing it. I was looking for a guide like this myself when studying and couldn’t find one, so this post is intended to fill that gap.

1] Pass the associate exam first

If you haven’t passed the Databricks Certified Data Engineer Associate certification exam, then I’d advise taking that first. In general, it is a lot easier and will provide you with the foundations for the professional exam. I found the suggested Data Engineering with Databricks training course provided by Databricks was sufficient to pass.

2] Study the exam guide

I found that the exam guide (found here) covered nearly all the topics that the questions were based on. However, it does not go into detail about what you are expected to know for each topic – use it as a starting point for each.

3] PySpark & Spark SQL experience

If you have hands-on experience with PySpark then ignore this step.

Navigating the Databricks documentation and learning paths alone will not give you the baseline knowledge of PySpark needed for the exam. The exam also expects some base-level knowledge of SQL / Spark SQL, e.g. joins and unions.

Therefore, I would recommend taking a short introductory course on PySpark, such as this course provided by Databricks.

As with all courses of this nature, follow along with your own Databricks instance rather than just watching the videos.
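To give a feel for the baseline, here is a minimal sketch of joins and unions in both the DataFrame API and Spark SQL. It assumes `spark` is an existing SparkSession (Databricks notebooks provide one); the table and column names are made up for illustration.

```python
def join_and_union_demo(spark):
    """Build two tiny DataFrames, then join and union them.

    `spark` is assumed to be an existing SparkSession, such as the one
    a Databricks notebook provides automatically.
    """
    customers = spark.createDataFrame(
        [(1, "Alice"), (2, "Bob")], ["customer_id", "name"]
    )
    orders = spark.createDataFrame(
        [(100, 1, 25.0), (101, 3, 40.0)], ["order_id", "customer_id", "amount"]
    )

    # Inner join keeps only customers that have a matching order.
    inner = customers.join(orders, on="customer_id", how="inner")

    # Left join keeps every customer; order columns are null where nothing matches.
    left = customers.join(orders, on="customer_id", how="left")

    # unionByName stacks rows and matches columns by name rather than position.
    more_customers = spark.createDataFrame([(3, "Carol")], ["customer_id", "name"])
    all_customers = customers.unionByName(more_customers)

    # The same inner join expressed in Spark SQL through temporary views.
    customers.createOrReplaceTempView("customers")
    orders.createOrReplaceTempView("orders")
    inner_sql = spark.sql(
        "SELECT c.customer_id, c.name, o.order_id, o.amount "
        "FROM customers c JOIN orders o ON c.customer_id = o.customer_id"
    )
    return inner, left, all_customers, inner_sql
```

In a notebook you could call `join_and_union_demo(spark)` and run `.show()` on each result to compare what the different join types return.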

4] You need prior experience in Databricks

I wouldn’t recommend this exam to anyone without around a year’s worth of experience in Databricks. The exam touches on a lot of different functionality within Databricks and expects you to be familiar with all aspects of the UI, which I feel can only be gained from having worked extensively with it – after all, it is a professional-level exam.

5] Databricks advanced engineering course

Take the Advanced Data Engineering with Databricks course that is recommended and made by Databricks.

The course is very good, and I would suggest running through it and taking time to understand each piece of code. However, I wouldn’t get too bogged down in it once you have completed it, as I found other materials were needed to fill in the extra information required for the exam.

6] Optimising Apache Spark on Databricks course

Although not included in the related training for the exam, I would thoroughly encourage you to run through the Optimising Apache Spark on Databricks course provided by Databricks. It gave me a great understanding of optimisations in Databricks, how to troubleshoot failures and how to fix slow-running queries. It equipped me with all the information I needed to answer the exam questions on this topic (other than those on the Ganglia UI). It covers more than is necessary, but the most important thing when studying for these exams is to improve your knowledge, and this course certainly did that for me – the piece of paper at the end of it is a bonus!

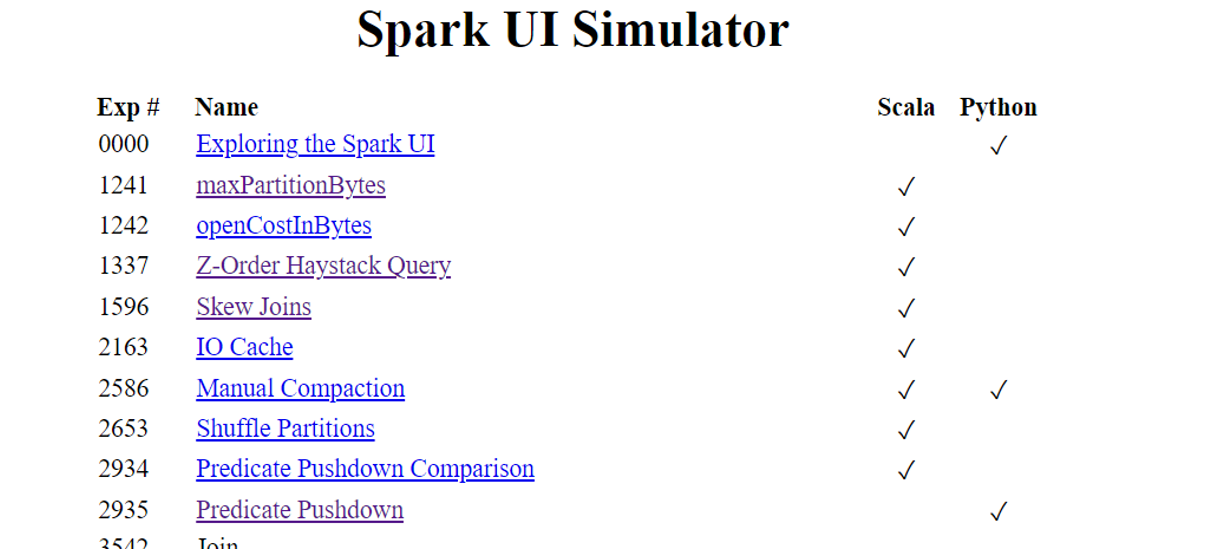

The course runs through various examples from the Spark UI Simulator and vastly improved my knowledge in this area. The Spark UI Simulator by Databricks is a learning tool that simulates the execution of a Spark job in Databricks via an interactive web browser.

7] Ganglia UI

I saw that drawing conclusions from the Ganglia UI was part of the exam guide. However, I couldn’t find any useful material within the learning path or in the Databricks documentation.

8] Specific subject areas

This tip goes into a bit more detail about specific subject areas I recommend you concentrate on.

- Using the REST API

As mentioned in tip 2, the exam guide does mention almost all the topics that come up in the exam, but I feel I was least prepared for the questions relating to the REST API.

Specifically, there were questions on using the REST API to get the information for multi-task jobs, and it was expected that you would know the output of specific REST API calls.
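As a rough illustration of what this kind of call looks like, the sketch below prepares a request to the Jobs API `GET /api/2.1/jobs/get` endpoint and pulls the task dependencies out of a response. The host, token, job ID and the sample response are all made up and heavily trimmed; a real response contains many more fields, so treat this as a prompt to read the API reference rather than a copy of real output.

```python
import json
import urllib.parse
import urllib.request


def build_get_job_request(host, token, job_id):
    """Prepare (but do not send) a GET request for a single job's definition."""
    query = urllib.parse.urlencode({"job_id": job_id})
    url = f"{host.rstrip('/')}/api/2.1/jobs/get?{query}"
    return urllib.request.Request(
        url, headers={"Authorization": f"Bearer {token}"}, method="GET"
    )


def task_dependencies(job):
    """Map each task_key to the list of task_keys it depends on."""
    tasks = job.get("settings", {}).get("tasks", [])
    return {
        task["task_key"]: [dep["task_key"] for dep in task.get("depends_on", [])]
        for task in tasks
    }


# Hand-written, trimmed illustration of a multi-task job response (not real output).
sample_response = json.loads("""
{
  "job_id": 123,
  "settings": {
    "name": "nightly_pipeline",
    "tasks": [
      {"task_key": "ingest"},
      {"task_key": "transform", "depends_on": [{"task_key": "ingest"}]},
      {"task_key": "publish", "depends_on": [{"task_key": "transform"}]}
    ]
  }
}
""")

print(task_dependencies(sample_response))
```

To actually send the request you would pass the result of `build_get_job_request(...)` to `urllib.request.urlopen` and parse the body with `json.load`. The point of the exercise is to get used to where the task list sits in the response.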

- Structured Streaming

I personally found this the most challenging, since I hadn’t used it in a practical sense; my only experience was the examples included in the training course. So, on top of this, I tried to recreate some simple scenarios in a notebook to better understand how it works.

Expect to receive quite a lot of questions on Structured Streaming, including specific questions on Auto Loader.
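If you want a simple scenario to recreate in a notebook, something like the sketch below is a reasonable starting point. It assumes you are on Databricks (Auto Loader's `cloudFiles` source is not available in open-source Spark), that `spark` is the notebook's SparkSession, and that the paths and table name are placeholders you supply yourself.

```python
def start_auto_loader_stream(spark, source_path, schema_path, checkpoint_path, target_table):
    """Incrementally ingest JSON files from source_path into a table.

    All paths and the table name are placeholders chosen by the caller.
    """
    stream = (
        spark.readStream.format("cloudFiles")               # Auto Loader source
        .option("cloudFiles.format", "json")                # format of the incoming files
        .option("cloudFiles.schemaLocation", schema_path)   # where the inferred schema is tracked
        .load(source_path)
    )
    return (
        stream.writeStream
        .option("checkpointLocation", checkpoint_path)      # progress tracking across restarts
        .trigger(availableNow=True)                         # process what is available, then stop
        .toTable(target_table)
    )
```

Dropping a few new files into the source path and re-running the function is a quick way to see that only the new files are picked up on the second run.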

- Deep clone and shallow clone

Properly understand how deep clone and shallow clone work. Understand what happens when different modifications are made to the source table or to the cloned table.
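For experimenting with this, a small helper like the one below can generate the two flavours of clone statement to run with `spark.sql(...)`. The helper and the table names are my own illustration. The short version of the difference is that a deep clone copies the data files as well as the metadata, while a shallow clone copies only the metadata and keeps referencing the source table's data files.

```python
def clone_statement(source, target, kind="DEEP", replace=False):
    """Return a Delta Lake CLONE statement for the given tables.

    kind is "DEEP" (copies data files and metadata) or
    "SHALLOW" (copies metadata only and references the source's data files).
    """
    kind = kind.upper()
    if kind not in ("DEEP", "SHALLOW"):
        raise ValueError("kind must be 'DEEP' or 'SHALLOW'")
    create = "CREATE OR REPLACE TABLE" if replace else "CREATE TABLE"
    return f"{create} {target} {kind} CLONE {source}"


print(clone_statement("sales", "sales_backup"))
print(clone_statement("sales", "sales_dev", kind="shallow", replace=True))
```

A useful exercise is to create one clone of each kind, then update, delete from and vacuum the source table and query both clones after each step.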

- MLflow: how to use a model to generate predictions

Learn how to use commands to work with MLflow models. I suspect if you have had any experience with MLflow then the question I had on this would have been very simple.
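As a minimal sketch of the kind of commands involved, the snippet below loads a registered model and uses it for predictions, first on a pandas DataFrame and then on a Spark DataFrame via a UDF. The model name, stage and column names are hypothetical, and `mlflow` is imported inside the functions so the URI helper can be tried without it installed.

```python
def model_uri(name, version_or_stage):
    """Build a model registry URI such as models:/churn_model/Production."""
    return f"models:/{name}/{version_or_stage}"


def score_with_pandas(uri, pandas_df):
    """Load the model as a generic Python function and predict on a pandas DataFrame."""
    import mlflow.pyfunc  # imported lazily; requires mlflow to be installed

    model = mlflow.pyfunc.load_model(uri)
    return model.predict(pandas_df)


def score_with_spark(spark, uri, spark_df, feature_columns):
    """Wrap the model in a Spark UDF and add a `prediction` column."""
    import mlflow.pyfunc  # imported lazily; requires mlflow to be installed

    predict = mlflow.pyfunc.spark_udf(spark, model_uri=uri)
    return spark_df.withColumn("prediction", predict(*feature_columns))


print(model_uri("churn_model", "Production"))
```

In a notebook, `score_with_spark(spark, model_uri("churn_model", "Production"), df, ["tenure", "monthly_spend"])` would return `df` with an extra `prediction` column, assuming a model of that name has been registered.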

- Permissions in Databricks

Understand how permissions are applied and which permissions are needed for different use cases, both for workspace-level objects (clusters, notebooks etc.) and for data securable objects (tables, views etc.). This was not included in the exam guide, but there were a couple of questions on it.
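For the data securable side, permissions are managed with SQL `GRANT` statements, and the sketch below builds a couple of them as strings to run with `spark.sql(...)`. The helper, the table name and the `analysts` group are made up for illustration. Workspace-level objects such as clusters and notebooks are not covered by this; their permissions are set through the UI or the Permissions API.

```python
def grant_statement(privilege, securable_type, securable_name, principal):
    """Return a GRANT statement; the principal is back-quoted as in Databricks SQL."""
    return f"GRANT {privilege} ON {securable_type} {securable_name} TO `{principal}`"


def show_grants_statement(securable_type, securable_name):
    """Return a statement listing the privileges granted on an object."""
    return f"SHOW GRANTS ON {securable_type} {securable_name}"


# Allow a (hypothetical) analysts group to read one table, then inspect the grants.
print(grant_statement("SELECT", "TABLE", "sales.orders", "analysts"))
print(show_grants_statement("TABLE", "sales.orders"))
```

Reading a table usually also requires a usage-type privilege on the containing schema, and the exact privilege names differ between legacy table access control and Unity Catalog, so it is worth checking the documentation for whichever one you use.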

9] Databricks Data Engineering Professional practice tests

YouTube had a few example questions that were useful in preparing for the exam. I thought some of the provided answers were incorrect, but formulating the answers yourself will really help cement the knowledge gained from other learning materials and will lead you to the areas of the Databricks documentation (see next tip) that you need to study. It will also expose areas you are less confident in and that require more learning.

There are a bunch of practice tests you can pay for on Udemy, but I didn’t purchase these, so I can’t personally attest to their quality.

10] Databricks documentation

The Databricks documentation is a comprehensive and useful tool. I would suggest using it to look deeper into subject areas not covered in detail by other learning materials, some of which are included in tip 8, particularly the Databricks REST API. I also found it useful to navigate the documentation when trying to analyse the answers to the different example questions.

You could probably pass the exam just by having some experience with Databricks and trawling through all the relevant sections of the Databricks documentation, but rather you than me.

11] Exam itself

I would suggest having a spare laptop on hand to be able to use if, for whatever reason, you can’t load the exam on your preferred laptop.

If this does happen, don’t panic. Take photos of your screen being stuck loading in case you completely miss your exam. In my case, the exam finally loaded after more than 20 minutes of trying, and I was still given the allotted 2 hours to complete it.

The invigilators are strict. Ridiculously, my exam was paused by the invigilator to tell me I wasn’t allowed to drink water during the exam. So, prepare to go thirsty for 2 hours.

My exam also kept getting paused because I looked away from my computer. They suggest you close your eyes if you want to look away from your screen!

If you don’t have any prohibited materials near you, you don’t need to worry. I was pulled up enough times for them to ask me to use my webcam to video the entire room, mid exam.

Good luck!