For a while now the data engineering landscape in Azure has been changing but in 2019 it seems to finally feel a bit more grounded with so many Azure products maturing. As such, Microsoft released the Azure Data Engineer Associate Certification at the beginning of the year. The requirements for this are DP-200 Implementing an Azure Data Solution and DP-201 Designing an Azure Data Solution.

Yesterday I passed DP-200 and wanted to share some of my thoughts. Existing material online around this course is still quite limited and those that have written up their experiences seem to have taken the Beta. Having now taken the exam, I can see its format has changed since then, so I thought it was worth a quick post. While I obviously took one flavour of the exam, there’s nothing to say other flavours don’t exist so don’t read into this content word for word. A colleague of mine took it at a test centre and said he had no labs (probably due to how ancient their computers were) but as mine was proctored I can only assume I got a different flavour. Either way, be prepared! I’ve also tried to be careful not to give away the questions and have instead opted to suggest topics to look at.

Exam Format

This exam is quite varied compared to others that I’ve taken but nothing too out of the ordinary. While the number of questions will probably vary between each instance of the exam, my version had 59 questions and was split as follows:

- Scenario Yes / No – 9 questions

- Multiple Choice – Single/Multi Answer, Drag Drop Order, and Hot Area – 30 questions

- Labs done within a Virtual Machine – 12 tasks

- Case Study – Multiple Choice – 8 questions

You have a total of 210 minutes for this exam!! 30 minutes of this is for the NDA and feedback which means you have 180 minutes to answer all the questions. This equates to roughly 3 minutes per question/task. It’s worth bearing this in mind since once you have been through some sections you cannot return to them. This happens at least 3 times in this exam between the different sections. A clock is provided in the corner, along with the number of the question you are on, to help you gauge roughly where you are.
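As a rough sanity check on the pacing, the arithmetic can be sketched in a few lines of Python. The section counts are the ones from my sitting, the variable names are purely illustrative, and an even split of time per item is an assumption rather than anything Microsoft publishes.

```python
# Section sizes from the sitting described in this post (other sittings may differ).
sections = {
    "Scenario Yes/No": 9,
    "Multiple Choice": 30,
    "Labs": 12,
    "Case Study": 8,
}

total_minutes = 210   # full exam slot
admin_minutes = 30    # NDA and feedback
answer_minutes = total_minutes - admin_minutes

total_items = sum(sections.values())
minutes_per_item = answer_minutes / total_items

print(f"{total_items} items, about {minutes_per_item:.1f} minutes each")

# Assumption: time is spread evenly across items, which is only a starting point.
for name, count in sections.items():
    print(f"{name}: about {count * minutes_per_item:.0f} minutes")
```

An even split is optimistic for the labs, since resources take time to create and the VM is slow, so it is probably worth banking time on the multiple choice sections where you can.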

I’ve focused on the labs section below, but other than this the experience is pretty much what you’d expect from multiple choice style questions. There is no need to write code for any of this.

I usually do my exams proctored through Pearson Vue and find the experience pretty good. They are sufficiently strict with the invigilating process which gives you confidence they cannot be cheated. Thankfully they have improved the exam release process and rather than scanning your room with your webcam which was slightly awkward to do on a laptop, you now have to take pictures through your mobile for workspace verification.

General Exam Tips

Before getting into the content, I thought it was worth calling out a few tips that apply to any exam you are taking.

Firstly, I would mark questions you are unsure of for review and come back to these. Sometimes it takes a while for the brain to warm up, or later content triggers thoughts related to previous questions.

Another tip which I often hear and is completely valid is around reading the answers before the question and the surrounding context. This helps set the scene when reading the detail and you will subconsciously be thinking in the background of the possible solutions.

And finally, if you have done Microsoft exams before – think Microsoft when answering questions and consider the terminology they use! If you think the question talks about something which can be achieved by 2 pieces of technology then work out what keywords you would relate to each and see how this is written in the question. The classic example of this is Azure Storage and Azure Data Lake Storage – there are keywords associated with each that you need to look out for, despite the two sharing very common principles.

Labs

Probably the sole reason for writing this blog was that I wasn’t expecting labs as part of this exam! As far as I can see it’s not mentioned anywhere on the official pages and as mentioned previously, the current reviews of the exam (which I used to prepare) seem to have been done in the beta state which obviously didn’t include this.

For the labs, you are given a Virtual Machine (VM) to log in to with an Azure account provided for you. The environment will feel very familiar to those that have been through Microsoft Learn courses, which provide sandbox subscriptions for you to follow exercises. This is very much a practical hands-on experience in which you need to follow tasks to build/configure Azure resources. This should not be particularly difficult given the complexity around other areas of the exam. Having said that, I spend quite a bit of time in the Azure Portal so know my way around.

Without alluding to too much in this area it’s worth noting a number of things:

- The VM you are given seems to be set up with the American keyboard layout, so typing @ on a UK keyboard when logging in to an account produces ” instead. You can either change the keyboard layout in the VM or press the ” key on a UK keyboard to generate the @ on screen. Not ideal for exam conditions 🙂

- One or two of my tasks were quite vague and I wasn’t completely sure of the state in which the configuration needed to be for me to complete the task. This is a bit of a shame given there is no advice around this area from Microsoft. For instance I followed the steps but my data source had no data throughput. Was this my issue? It’s not clear. I assume this is marked automatically somehow so the presence of what I created should be enough to have got me the marks.

- Others were much more defined and I was confident I had completed the task.

- There is no SSMS installed, so you need to find other ways to achieve its functionality via the portal. This felt a bit alien but I can see what they are alluding to here.

- Read the resource names carefully in both the tasks and Azure. Unfortunately they need to name them to be unique per exam so you find that they are quite hard to read when the environment is new to you.

- You are working with Azure so things take time to create/update and the VM is not particularly responsive compared to your desktop experience. You will need more time than you think to do some of the tasks even though you know what they are asking for.

- If you encounter an error – which is entirely feasible, take a step back and see if what you are doing already exists. In some instances, I think I also found task errors but continued with what I thought the task was asking of me.

- Finally, there’s not going to be marks for best practice so do not worry about naming conventions etc.

What’s actually covered?

As you might expect, it’s the Azure Data Stack, and all the ins/outs of the various configurations/concepts and use cases for the resources. As dull as it may sound I think reading the documentation for each resource from start to finish (using the hyperlinks below) would cover 100% of the content in some form or another. As this is not particularly interactive in terms of learning, I would skip over content which is common between resources. Generally they are interested in testing your understanding of the concepts and whether you would use a resource in certain scenarios, rather than understanding 100% of the detail behind a feature.

- Elastic Pools, Managed Instances, Purchase Models & Service Tiers (Hyperscale, etc).

- Performance Monitoring (DMVs, Performance Recommendations, Query Performance Insight, Auto Tuning, Diagnostics Logging, etc).

- Availability Capability (Backups, Geo-Replication, Failover Groups, etc).

- Security & Compliance (Data Masking, TDE, Always Encrypted, Data Discovery, Threat Protection, Active Directory Integration, Auditing, etc).

- On-Prem to Azure (Data Sync, DMS, etc).

- Firewall Rules & Virtual Networks.

- Distributions, Partitioning, Resource Classes, Polybase, External Tables, CTAS, Performance Monitoring (DMVs), Authentication & Security, etc.

- Cloud vs Edge, Inputs, Outputs, UDAs, UDFs, Window Functions, Stream vs Reference, Partitioning, Performance, Scaling.

- Pipelines & Activities, Datasets, Linked Services, Integration Runtimes, Triggers, Authoring/Monitoring Experience.

- Blobs vs ADLS Gen2, Data Redundancy, Security, Performance Tiers, Storage Lifecycle, Migration.

- Keys, Secrets, Certs, and Access Policies.

- Use Cases in Architecture, Terminology, Availability, Consistency, Scalability.

- Sources, Topics, Subscriptions, Handlers, Use Cases in Architecture.

- Use Cases in Architecture.

- Use Cases for APIs, Consistency Levels, Distribution, Partitioning.

- Use Cases in Architecture, Clusters, Secrets, Connecting to Data Sources, Performance Metrics.

- Application, Alerts, Log Analytics.

As documented in a recent update to the exam in June 2019 there seems to be very little requirement to understand the impact of HDInsight in architectures. This has been replaced by much more focus on Databricks which is good to see. You should still understand the basic use cases for HDInsight however. The same is true of Data Lake Store Gen1 which briefly makes an appearance but given it’s now generally been superseded by Gen2 I expect this to phase out.

If you have used any of the above resources for some time then the questions should feel fairly comfortable, e.g. I wouldn’t read the complete Data Factory documentation as I feel like I use it so much I know about all of its niches. Instead focus on tech that you might not use as much, e.g. IoT Hub, Stream Analytics, Monitor, etc.

How do I prepare?

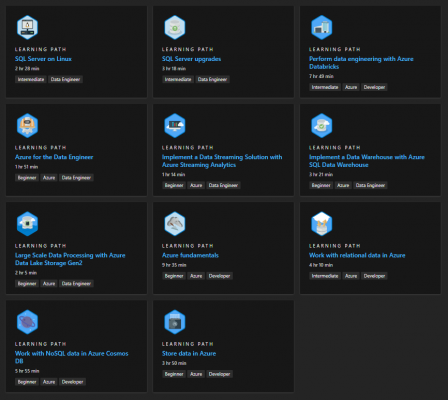

The first port of call for this exam is to cover the content on Microsoft Learn related to being a Data Engineer. This is a new portal that Microsoft have put together to replace the edX training content that some may be familiar with over the years. The good thing about this portal is that it can be used by both complete novices to Azure and also those more experienced.

In my case, since I work day to day with the technology I quickly zipped through the Learning Paths below. It’s suggested this takes about 46 hours to do properly, but without needing to do the exercises (there’s only so many storage accounts I want to create in my lifetime!!) and skim reading the areas where I was confident I already knew the detail, I was through in about 3-4 hours. But why am I suggesting zipping through these paths if they are meant for novices? This is because Microsoft does a very good job of condensing the detail around the resources and their configuration into only a few paragraphs on each page. The reason why this is good is for key words. If you start to understand how Microsoft pitches the services and functionality you will have a much better idea when answering questions, as they use the exact same language.

This initial approach will let you cover quite a bit of content but it does not go into too much of the Azure resource features. This is where I would suggest reading the Azure documentation above for gaps and further detail. Currently there is no book available for this exam – something which Microsoft usually publishes to help candidates prepare. I expect to see this resource appear soon and this would be preferable to going into so much detail in the documentation. Other than this there is the usual Pluralsight, edX etc but I feel these are so broad and often only useful to get into a subject rather than concentrate on details.

In total I spent about 10-15 hours preparing and reading around subject areas that I was not familiar with on a day to day basis. This combined with roughly 2-3 years’ experience in Azure seems to have done the job. I was lucky to have worked across pretty much all the resources questioned in the exam and drew on this experience. If you have not used, for instance, Stream Analytics, I would spin up the resource, start using it and do some exercises with it. There is no substitute for hands-on experience with the technology, and you will find this much more useful to reference than trying to remember points made within a paragraph of the documentation.

Conclusion

For any aspiring data engineers, this exam is a fundamental Microsoft qualification to add to your profile. It brings together the Azure Data Stack nicely and gives you an appreciation for how the different services interact with one another and the use cases for one over the other depending upon requirements.

Please feel free to comment and I’ll keep the list of skills updated if I’ve missed anything. Hopefully this is a useful guide if not just for calling out the addition of labs!

** UPDATE **

A week after passing DP-200, I also passed DP-201. It wasn’t worth creating a separate post as the crossover between DP-200 and DP-201 is huge. As expected, the difference in format is the lack of labs in DP-201, but the Yes/No scenarios and the Case Studies still exist. There were also fewer questions.

In terms of content, while the main focus of DP-200 is implementation (not surprising given the title), the main focus of DP-201 is on design. This means you will get a lot more scenario-based questions with a particular focus on architecture, e.g. when to use one service over another. In some instances, I managed to get the same question asked twice (around the type of storage for a particular solution they were trying to build), which was interesting, and a later set of questions even alluded to the storage in question while asking about something else! If you are comfortable understanding the scenarios such as SQL DB vs SQL DW vs Cosmos DB etc you should be fine. There is nothing in particular which caught me out (unlike some other exams) but I’d probably suggest security needs the most revision since it has lots of niches which you probably would not implement day-to-day.

I’d argue if you have revised for DP-200, you should do DP-201 very soon after as it covers pretty much the same content but with a design twist. Good luck!