To me, one of the most exciting parts of the Microsoft Analytics Platform System (APS) is Polybase, which in a nutshell allows you to access data residing in Hadoop or Windows Azure Blob Storage, all via T-SQL in PDW. This means that a user or developer can query the data transparently, in real time, regardless of whether it lives in PDW or in Hadoop/Azure. James Rowland-Jones gives a thorough overview of all things Polybase here.

What I’m going to do in this post is to show an example of how existing data within Hadoop can be combined with data that resides in an APS PDW region.

Polybase Example – Setup

There are two key tasks to complete before we’re able to start querying data. We need to set up a data source, so that Polybase knows where to get the data from, and we need to describe the file format of the external file that we’re going to read. The data source here specifies that we’re going to use the Hadoop nodes that are co-located with the PDW nodes, but this could equally be a non-appliance Hadoop cluster:

    CREATE EXTERNAL DATA SOURCE HadoopRegion_DataSource
    WITH (
        TYPE = HADOOP,
        LOCATION = 'hdfs://H12345-C-HHN01',
        -- Optional: specify a tracker location to enable
        -- predicate push down to Hadoop
        JOB_TRACKER_LOCATION = 'H12345-C-HHN01:50300'
    );

The next task is to set up a file format. In this case we’re defining the file as pipe-delimited, although Polybase can also be used with other formats (e.g. RCFile):

    CREATE EXTERNAL FILE FORMAT HadoopRegion_DelimitedPipe_NoCompression
    WITH (
        FORMAT_TYPE = DELIMITEDTEXT,
        FORMAT_OPTIONS ( FIELD_TERMINATOR = '|' )
    );
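As a rough sketch of how the same statement might look for a compressed file, the variation below adds a string delimiter, type defaults for missing values, and Gzip compression. The format name HadoopRegion_DelimitedPipe_Gzip is made up for illustration, and whether each option is available depends on the appliance version, so treat this as an assumption to check against your own environment rather than a tested definition:

```sql
-- Hypothetical variant of the file format above (the name is illustrative only).
CREATE EXTERNAL FILE FORMAT HadoopRegion_DelimitedPipe_Gzip
WITH (
    FORMAT_TYPE = DELIMITEDTEXT,
    FORMAT_OPTIONS (
        FIELD_TERMINATOR = '|',   -- same pipe delimiter as before
        STRING_DELIMITER = '"',   -- assumes text values are wrapped in double quotes
        USE_TYPE_DEFAULT = TRUE   -- missing values become the column type's default
    ),
    -- Assumes the files in Hadoop were written with the Gzip codec
    DATA_COMPRESSION = 'org.apache.hadoop.io.compress.GzipCodec'
);
```

The file I use in this post is uncompressed, so the simpler format above is all that is needed for the rest of the example.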

Accessing External Data

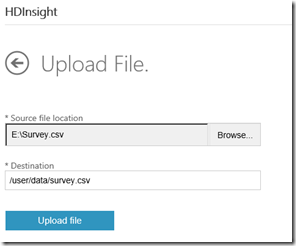

Before we access data in Hadoop, I want to show the file for this example. In this case I’ve got a customer dimension within PDW, but I have customer survey data within Hadoop that I wish to combine with my data in the warehouse. The survey data has been uploaded to the Hadoop region within APS, via the portal:

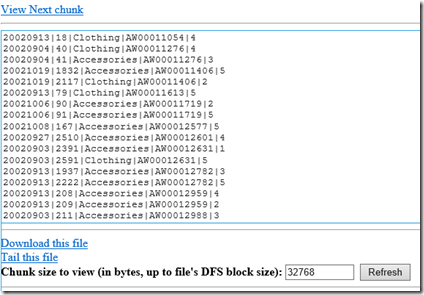

The following shows a sample of the Hadoop file. Note that it’s pipe-delimited, with columns for Date, Survey Id, Product, Customer Id and Survey Score:

Now I want to access the Hadoop data and combine it with other data in the warehouse. To do this we need to use the CREATE EXTERNAL TABLE command:

    CREATE EXTERNAL TABLE [dbo].[HDFS_CustomerSurvey]
    (
        DateId              int          NOT NULL,
        SurveyResponseId    int          NOT NULL,
        ProductCategoryName varchar(100) NULL,
        CustomerId          varchar(50)  NULL,
        SurveyResult        int          NULL
    )
    WITH (
        LOCATION = '/user/data/survey/',
        DATA_SOURCE = HadoopRegion_DataSource,
        FILE_FORMAT = HadoopRegion_DelimitedPipe_NoCompression
    );

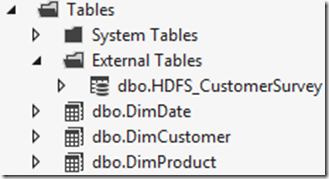

This results in an external table being available within the PDW region, as shown below:

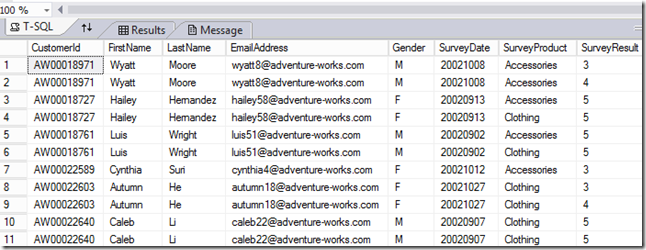
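If the survey files might contain the odd malformed row, the external table definition can also carry reject options. The sketch below reuses the data source and file format from earlier; the table name HDFS_CustomerSurvey_Tolerant and the threshold of 10 rows are made-up values for illustration, not something I have tuned for this data:

```sql
-- Hypothetical variant of the external table that tolerates a few bad rows.
CREATE EXTERNAL TABLE [dbo].[HDFS_CustomerSurvey_Tolerant]
(
    DateId              int          NOT NULL,
    SurveyResponseId    int          NOT NULL,
    ProductCategoryName varchar(100) NULL,
    CustomerId          varchar(50)  NULL,
    SurveyResult        int          NULL
)
WITH (
    LOCATION = '/user/data/survey/',
    DATA_SOURCE = HadoopRegion_DataSource,
    FILE_FORMAT = HadoopRegion_DelimitedPipe_NoCompression,
    REJECT_TYPE = VALUE,   -- count rejected rows as an absolute number
    REJECT_VALUE = 10      -- illustrative threshold: the query fails once more than 10 rows are rejected
);
```

With the reject options left out, as in the original definition, a single row that doesn't match the column definitions is enough to fail the query.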

Now if we want to query the Hadoop data it’s just a simple case of T-SQL. Here I’m joining Hadoop survey data to the customer dimension:

    SELECT CS.CustomerId,
           C.FirstName,
           C.LastName,
           C.EmailAddress,
           C.Gender,
           CS.DateId AS SurveyDate,
           CS.ProductCategoryName AS SurveyProduct,
           CS.SurveyResult
    FROM dbo.HDFS_CustomerSurvey CS
    INNER JOIN dbo.DimCustomer C
        ON C.CustomerAlternateKey = CS.CustomerId;

Which gives the following results:

So that’s it, just a simple T-SQL query in the end. Polybase has taken away the complexity and allowed us to integrate different data sources using a widely used standard query language.
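As a possible next step, and only as a sketch, the same external table can feed an aggregate query, or be copied into the PDW region with CREATE TABLE AS SELECT if the survey data is going to be queried repeatedly. The local table name CustomerSurvey_Local and the choice of CustomerId as the distribution column are assumptions for illustration:

```sql
-- Average survey score by gender and product, reading straight from Hadoop.
SELECT C.Gender,
       CS.ProductCategoryName AS SurveyProduct,
       AVG(CAST(CS.SurveyResult AS decimal(9, 2))) AS AvgSurveyResult,
       COUNT(*) AS Responses
FROM dbo.HDFS_CustomerSurvey CS
INNER JOIN dbo.DimCustomer C
    ON C.CustomerAlternateKey = CS.CustomerId
GROUP BY C.Gender,
         CS.ProductCategoryName;

-- Hypothetical: persist the Hadoop data as a distributed table in PDW.
CREATE TABLE dbo.CustomerSurvey_Local
WITH ( DISTRIBUTION = HASH(CustomerId) )
AS
SELECT DateId,
       SurveyResponseId,
       ProductCategoryName,
       CustomerId,
       SurveyResult
FROM dbo.HDFS_CustomerSurvey;
```

Copying the data trades freshness for speed: the local table won't reflect new files arriving in Hadoop until it is rebuilt.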